It’s surprising that such a simple function works so well in deep neural networks. The function is also very fast to compute (compared to Sigmoid and Tanh).

The output of ReLU does not have a maximum value (it does not saturate for positive inputs), and this helps Gradient Descent.

The ReLU function is continuous, but it is not differentiable at 0, because its slope changes abruptly there: the derivative is 0 for any negative input and 1 for any positive input. So the ReLU function is non-linear around 0, but the slope is always either 0 (for negative inputs) or 1 (for positive inputs). A function is non-linear if its slope isn’t constant. Graphically, the ReLU function is composed of two linear pieces, and the change of slope between them accounts for the non-linearity.

The plot of ReLU and its derivative (image by author)

The function returns 0 if the input is negative, but for any positive input, it returns that value back. The Rectified Linear Unit (ReLU) is the most commonly used activation function in deep learning.

To apply the function for some constant inputs:

    import tensorflow as tf
    from import tanh
    z = tf.constant(, dtype=tf.float32)
    output = tanh(z)
    output.numpy()

To use the Tanh, we can simply pass 'tanh' to the argument activation:

    from import Dense
    Dense(10, activation='tanh')
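The piecewise behaviour of ReLU described above can be sketched in plain Python. This is only an illustration, not the Keras implementation: the helper names `relu` and `relu_slope` and the sample inputs are assumptions made for this example, and returning a slope of 0 at exactly 0 is a common convention rather than a true derivative.

```python
def relu(x: float) -> float:
    """Return 0 for negative inputs and the input itself otherwise."""
    return max(0.0, x)


def relu_slope(x: float) -> float:
    """Slope of ReLU: 0 for negative inputs, 1 for positive inputs.

    ReLU has no derivative at exactly 0; returning 0.0 there is an
    assumption made for this sketch.
    """
    return 1.0 if x > 0 else 0.0


# Hypothetical sample values, chosen only for illustration.
inputs = [-2.0, -0.5, 0.0, 0.5, 2.0]
outputs = [relu(x) for x in inputs]
slopes = [relu_slope(x) for x in inputs]

print(outputs)
print(slopes)
```

The slope takes only two values, which is why ReLU is cheap to evaluate and why positive inputs never saturate.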

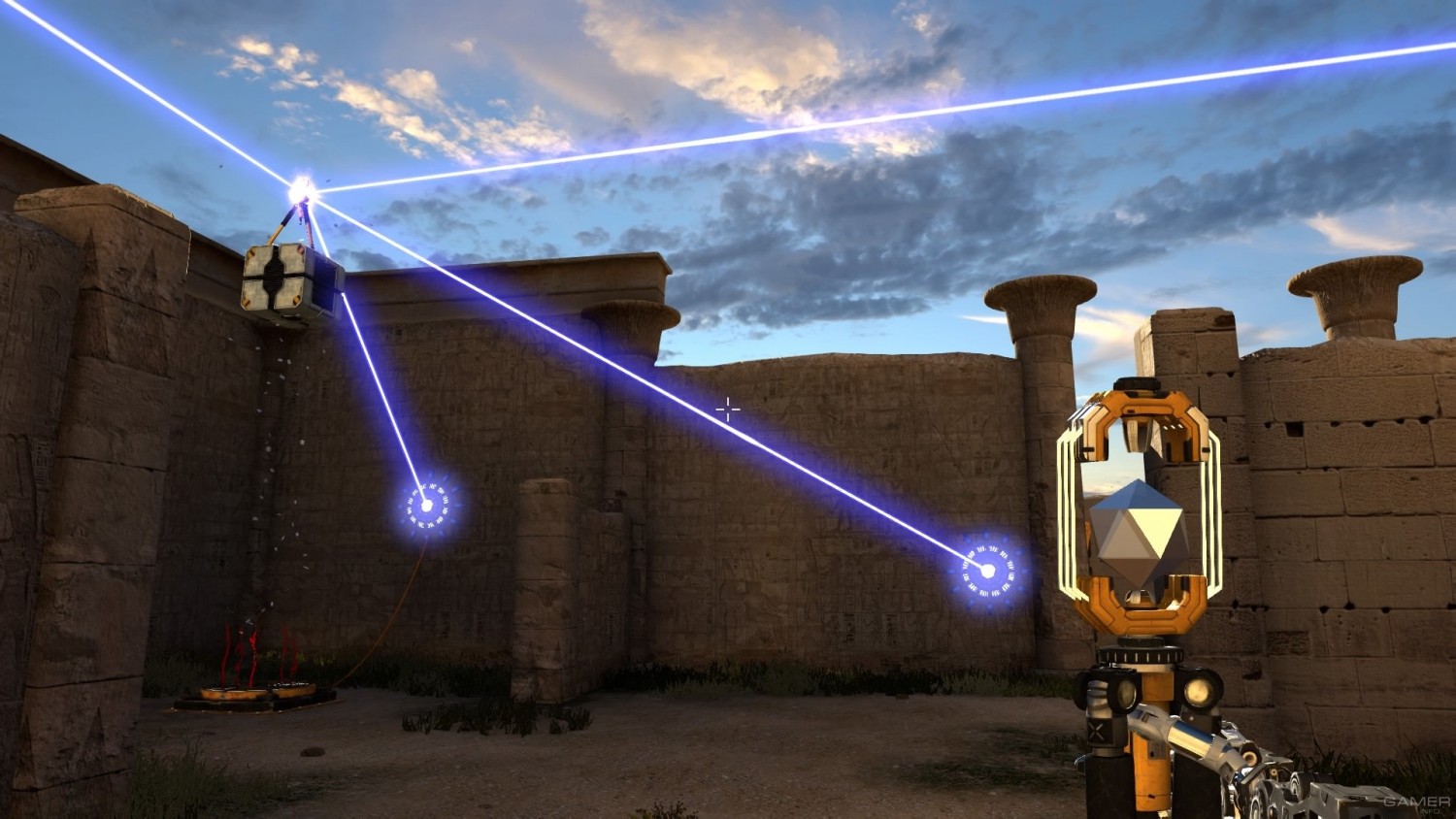


How to use Tanh with Keras and TensorFlow 2

Since Tanh has characteristics similar to Sigmoid, it also faces the same problems, most notably:

Vanishing gradient: looking at the function plot, you can see that when inputs become very negative or very large, the function saturates at -1 or 1, with a derivative extremely close to 0. Thus it has almost no gradient to propagate back through the network, so there is almost nothing left for lower layers.

One important point to mention is that Tanh tends to make each layer’s output more or less centered around 0, and this often helps speed up convergence. Tanh has characteristics similar to Sigmoid that can work with Gradient Descent.
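To make the saturation argument concrete, the short sketch below evaluates Tanh and its derivative, using the identity d/dx tanh(x) = 1 - tanh(x)^2, at a few points. It uses only the standard library; the helper name `tanh_grad` and the sample inputs are assumptions chosen for illustration.

```python
import math


def tanh_grad(x: float) -> float:
    """Derivative of tanh, computed as 1 - tanh(x)^2."""
    return 1.0 - math.tanh(x) ** 2


# Hypothetical sample values: around 0 and far out on both sides.
sample_inputs = [-5.0, -1.0, 0.0, 1.0, 5.0]

for x in sample_inputs:
    # Far from 0 the output is close to -1 or 1 and the gradient is tiny.
    print(f"x={x:+.1f}  tanh={math.tanh(x):+.4f}  grad={tanh_grad(x):.6f}")
```

The gradient is largest at 0 and shrinks quickly as the input moves away from it, which is the vanishing gradient effect described above. The outputs are also symmetric around 0, which matches the point about zero-centered layer outputs.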

Problems with Sigmoid activation function

The Sigmoid function was introduced to Artificial Neural Networks (ANN) in the 1990s to replace the Step function. It was a key change to ANN architecture because the Step function doesn’t have any gradient to work with Gradient Descent, while the Sigmoid function has a well-defined nonzero derivative everywhere, allowing Gradient Descent to make some progress at every step during training.

The function is a common S-shaped curve. The output of the function is centered at 0.5 with a range from 0 to 1. The function is differentiable, which means we can find the slope of the sigmoid curve at any point. The function is monotonic, but its derivative is not.

The plot of Sigmoid function and its derivative (Image by author)
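The properties listed above can be checked numerically with a small standard-library sketch. It assumes the usual logistic definition 1 / (1 + e^(-x)) and the derivative identity s(x) * (1 - s(x)); the helper names `sigmoid` and `sigmoid_grad` and the sample inputs are chosen for this example only.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid: maps any real input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid, computed as s(x) * (1 - s(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


# Hypothetical sample values, chosen only for illustration.
for x in [-4.0, -2.0, 0.0, 2.0, 4.0]:
    print(f"x={x:+.1f}  sigmoid={sigmoid(x):.4f}  grad={sigmoid_grad(x):.4f}")
```

The output rises steadily from near 0 to near 1 and passes through 0.5 at an input of 0, while the derivative peaks at 0 and falls off on both sides. That is why the function is monotonic but its derivative is not.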
